Deploying multiple MCP services with Ray Serve#

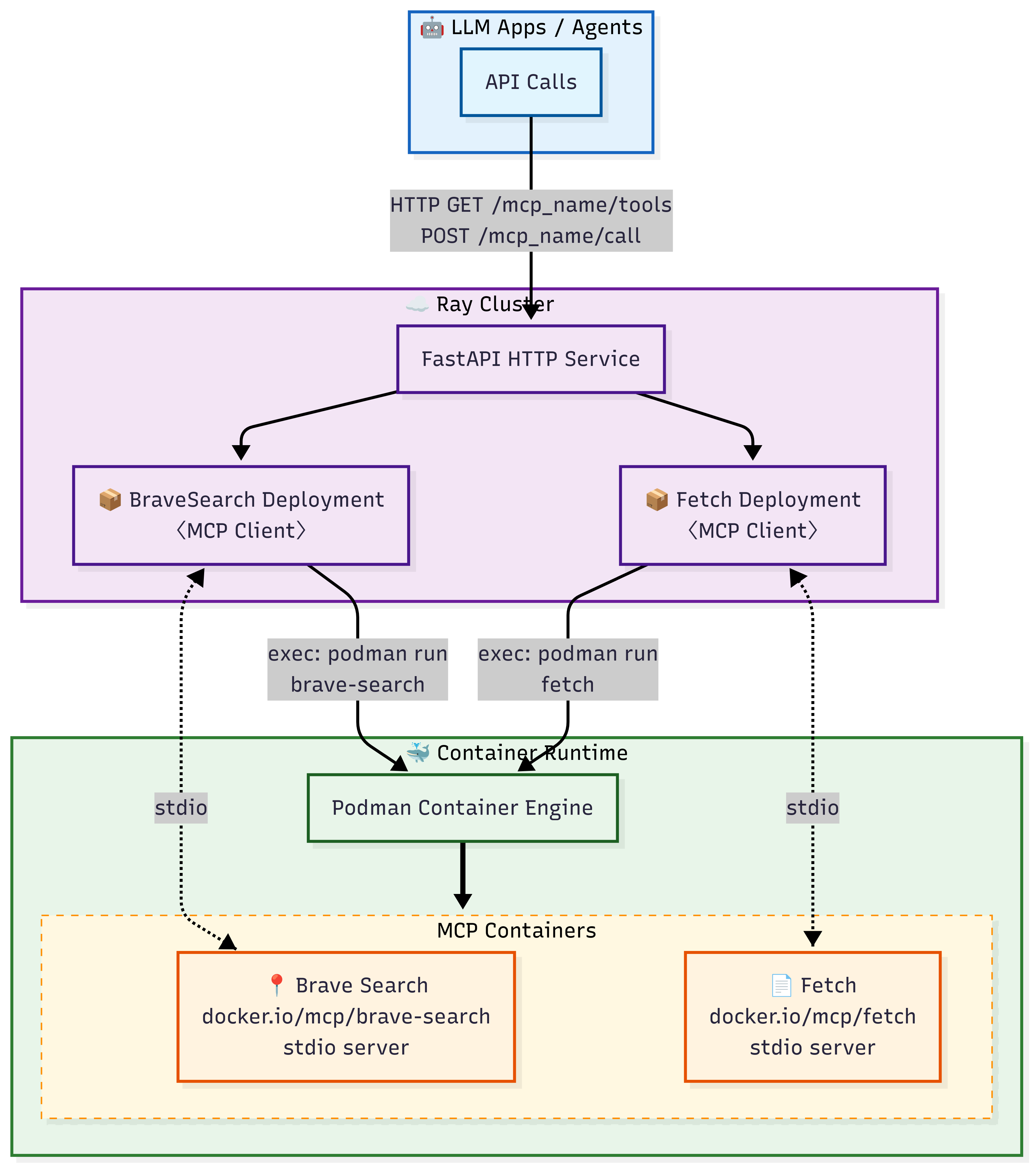

This tutorial deploys two MCP services, Brave Search and Fetch, with Ray Serve. It uses autoscaling, fractional CPU allocation, and a single HTTP router that dispatches requests to each service.

Combined with Anyscale, this setup allows you to run production-grade services with minimal overhead, auto-provision compute as needed, and deploy updates without downtime. Whether you’re scaling up a single model or routing across many, this pattern provides a clean, extensible path to deployment.

To add more MCP services, call build_mcp_deployment for each new service and pass its bound handle to the router. The Router class in this tutorial takes one handle per service, so extend its constructor accordingly.

The architecture diagram at the top of this page illustrates deploying multiple MCP Docker images with Ray Serve.

Prerequisites#

Ray [Serve], already included in the base Docker image

Podman

A Brave API key set in your environment (BRAVE_API_KEY)

MCP Python library

Dependencies#

Build Docker image for Ray Serve deployment

In this tutorial you need to build a Docker image for deployment on Anyscale using the Dockerfile included in this code repo.

A custom image is necessary because a system package installed from the workspace terminal (for example, with apt-get install -y podman) exists only on the Ray head node and isn't propagated to the Ray worker nodes.

After building the Docker image, open the Dependencies tab in Workspaces, select the image you just created, and set the BRAVE_API_KEY environment variable.

Note

This Docker image is provided solely to deploy the MCP services with Ray Serve. Ensure that your MCP Docker images, like docker.io/mcp/brave-search, are already published to a public registry or to your own private registry.

Common issues#

FileNotFoundError: [Errno 2] No such file or directory

Usually indicates that the podman executable isn't installed or isn't on the PATH of the node running the replica. Verify the Podman installation in your Docker image.

KeyError: 'BRAVE_API_KEY'

Ensure you have exported BRAVE_API_KEY in your environment or included it in your dependency configuration.
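Both issues above can be caught before deploying. The following is a minimal preflight sketch, not part of the tutorial's code: the function name preflight and its parameters are hypothetical, and it uses only the Python standard library. It checks the node it runs on, so it doesn't prove that worker nodes are configured the same way.

```python
import os
import shutil
from typing import Callable, List, Mapping, Optional


def preflight(
    env: Mapping[str, str] = os.environ,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    # Launching the podman command fails with FileNotFoundError
    # when the executable isn't on PATH.
    if which("podman") is None:
        problems.append("podman executable not found on PATH")
    # The deployment file requires BRAVE_API_KEY in the environment.
    if not env.get("BRAVE_API_KEY"):
        problems.append("BRAVE_API_KEY is not set")
    return problems


# Report problems for the current machine (prints nothing if all is well).
for problem in preflight():
    print("preflight:", problem)
```

The env and which parameters exist only so the check can be exercised without changing the real environment.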

1. Create the deployment file#

Save the following code as multi_mcp_ray_serve.py:

import asyncio
import logging
import os
from contextlib import AsyncExitStack
from typing import Any, Dict, List, Optional

from fastapi import FastAPI, HTTPException, Request
from ray import serve
from ray.serve.handle import DeploymentHandle

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

logger = logging.getLogger("multi_mcp_serve")


def _podman_args(
    image: str,
    *,
    extra_args: Optional[List[str]] = None,
    env: Optional[Dict[str, str]] = None,
) -> List[str]:
    args = ["run", "-i", "--rm"]
    for key, value in (env or {}).items():
        if key.upper() == "PATH":
            continue
        args += ["-e", f"{key}={value}"]
    if extra_args:
        args += extra_args
    args.append(image)
    return args

class _BaseMCP:
    # Overridden per deployment by build_mcp_deployment.
    _PODMAN_ARGS: List[str] = []
    _ENV: Dict[str, str] = {}

    def __init__(self):
        # Start the MCP server in the background; requests await this task.
        self._ready = asyncio.create_task(self._startup())

    async def _startup(self):
        # Launch the MCP server container with Podman and talk to it over stdio.
        params = StdioServerParameters(
            command="podman",
            args=self._PODMAN_ARGS,
            env=self._ENV,
        )
        self._stack = AsyncExitStack()
        stdin, stdout = await self._stack.enter_async_context(stdio_client(params))
        self.session = await self._stack.enter_async_context(ClientSession(stdin, stdout))
        await self.session.initialize()
        logger.info("%s replica ready", type(self).__name__)

    async def _ensure_ready(self):
        await self._ready

    async def list_tools(self) -> List[Dict[str, Any]]:
        await self._ensure_ready()
        resp = await self.session.list_tools()
        return [
            {"name": t.name, "description": t.description, "input_schema": t.inputSchema}
            for t in resp.tools
        ]

    async def call_tool(self, tool_name: str, tool_args: Dict[str, Any]) -> Any:
        await self._ensure_ready()
        return await self.session.call_tool(tool_name, tool_args)

    async def __del__(self):
        # Close the MCP session and the stdio client when the replica shuts down.
        if hasattr(self, "_stack"):
            await self._stack.aclose()

def build_mcp_deployment(
    *,
    name: str,
    docker_image: str,
    num_replicas: int = 3,
    num_cpus: float = 0.5,
    autoscaling_config: Optional[Dict[str, Any]] = None,
    server_command: Optional[str] = None,
    extra_podman_args: Optional[List[str]] = None,
    env: Optional[Dict[str, str]] = None,
) -> serve.Deployment:
    """
    - If autoscaling_config is provided, Ray Serve will autoscale between
      autoscaling_config['min_replicas'] and ['max_replicas'].
    - Otherwise it will launch `num_replicas` fixed replicas.
    """
    deployment_env = env or {}
    podman_args = _podman_args(docker_image, extra_args=extra_podman_args, env=deployment_env)
    if server_command:
        podman_args.append(server_command)

    # Build kwargs for the decorator:
    deploy_kwargs: Dict[str, Any] = {
        "name": name,
        "ray_actor_options": {"num_cpus": num_cpus},
    }
    if autoscaling_config:
        deploy_kwargs["autoscaling_config"] = autoscaling_config
    else:
        deploy_kwargs["num_replicas"] = num_replicas

    @serve.deployment(**deploy_kwargs)
    class MCP(_BaseMCP):
        _PODMAN_ARGS = podman_args
        _ENV = deployment_env

    return MCP

# -------------------------
# HTTP router code
# -------------------------
api = FastAPI()


@serve.deployment
@serve.ingress(api)
class Router:
    def __init__(self,
                 brave_search: DeploymentHandle,
                 fetch: DeploymentHandle) -> None:
        self._mcps = {"brave_search": brave_search, "fetch": fetch}

    @api.get("/{mcp_name}/tools")
    async def list_tools_http(self, mcp_name: str):
        handle = self._mcps.get(mcp_name)
        if not handle:
            raise HTTPException(404, f"MCP {mcp_name} not found")
        try:
            return {"tools": await handle.list_tools.remote()}
        except Exception as exc:
            logger.exception("Listing tools failed")
            raise HTTPException(500, str(exc))

    @api.post("/{mcp_name}/call")
    async def call_tool_http(self, mcp_name: str, request: Request):
        handle = self._mcps.get(mcp_name)
        if not handle:
            raise HTTPException(404, f"MCP {mcp_name} not found")
        body = await request.json()
        tool_name = body.get("tool_name")
        tool_args = body.get("tool_args")
        if tool_name is None or tool_args is None:
            raise HTTPException(400, "Missing 'tool_name' or 'tool_args'")
        try:
            result = await handle.call_tool.remote(tool_name, tool_args)
            return {"result": result}
        except Exception as exc:
            logger.exception("Tool call failed")
            raise HTTPException(500, str(exc))

# -------------------------
# Binding deployments
# -------------------------
if "BRAVE_API_KEY" not in os.environ:
    raise RuntimeError("BRAVE_API_KEY must be set before `serve run`.")

# Example: autoscaling BraveSearch between 1 and 5 replicas,
# targeting ~10 concurrent requests per replica.
BraveSearch = build_mcp_deployment(
    name="brave_search",
    docker_image="docker.io/mcp/brave-search",
    env={"BRAVE_API_KEY": os.environ["BRAVE_API_KEY"]},
    num_cpus=0.2,
    autoscaling_config={
        "min_replicas": 1,
        "max_replicas": 5,
        "target_ongoing_requests": 10,
    },
)

# Example: keep Fetch at a fixed 2 replicas.
Fetch = build_mcp_deployment(
    name="fetch",
    docker_image="docker.io/mcp/fetch",
    num_replicas=2,
    num_cpus=0.2,
)

brave_search_handle = BraveSearch.bind()
fetch_handle = Fetch.bind()
app = Router.bind(brave_search_handle, fetch_handle)
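To see what each replica actually launches, it can help to print the Podman command that the argument-building helper produces. The sketch below is separate from multi_mcp_ray_serve.py: it carries a standalone copy of the helper's logic under the hypothetical name podman_args so that it runs without Ray or MCP installed, and "dummy-key" is a placeholder value.

```python
import shlex
from typing import Dict, List, Optional


def podman_args(
    image: str,
    *,
    extra_args: Optional[List[str]] = None,
    env: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Standalone copy of the tutorial's argument-building logic."""
    args = ["run", "-i", "--rm"]
    for key, value in (env or {}).items():
        if key.upper() == "PATH":
            continue  # PATH is never forwarded into the container
        args += ["-e", f"{key}={value}"]
    if extra_args:
        args += extra_args
    args.append(image)
    return args


argv = ["podman"] + podman_args(
    "docker.io/mcp/brave-search",
    env={"BRAVE_API_KEY": "dummy-key", "PATH": "/usr/bin"},
)
command_line = shlex.join(argv)
print(command_line)
# podman run -i --rm -e BRAVE_API_KEY=dummy-key docker.io/mcp/brave-search
```

Note that each environment value ends up in the command-line arguments, so the API key is part of the argument list of the podman process.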

You can run the app programmatically to launch it in the workspace:

serve.run(app)

Or you can run it from the command line, as shown in the next section.

Note:

On the Ray cluster, use Podman instead of Docker to run and manage containers. This approach aligns with the guidelines provided in the Ray Serve multi-app container deployment documentation.

Additionally, for images such as "docker.io/mcp/brave-search", explicitly include the "docker.io/" prefix to ensure Podman correctly identifies the image URI.

This tutorial passes only the num_cpus parameter to ray_actor_options. Feel free to modify the code to include additional supported parameters as outlined here: https://docs.ray.io/en/latest/serve/resource-allocation.html#

Auto-scaling parameters are provided in autoscaling_config as an example. For more details on configuring auto-scaling in Ray Serve deployments, see:

https://docs.ray.io/en/latest/serve/configure-serve-deployment.html

https://docs.ray.io/en/latest/serve/autoscaling-guide.html

https://docs.ray.io/en/latest/serve/advanced-guides/advanced-autoscaling.html#serve-advanced-autoscaling
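As rough intuition for the autoscaling_config used for Brave Search (min_replicas 1, max_replicas 5, target_ongoing_requests 10), the sketch below models the replica count as total in-flight requests divided by the per-replica target, clamped to the configured bounds. This is a simplified model and an assumption on my part, not Ray Serve's implementation: the real autoscaler also applies delays and smoothing, as the guides linked above describe. The function name desired_replicas is hypothetical.

```python
import math


def desired_replicas(
    total_ongoing_requests: int,
    target_ongoing_requests: int,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Simplified steady-state estimate of the replica count."""
    # Enough replicas so that each handles about `target_ongoing_requests`.
    raw = math.ceil(total_ongoing_requests / target_ongoing_requests)
    # Never go below min_replicas or above max_replicas.
    return max(min_replicas, min(max_replicas, raw))


for load in (0, 8, 35, 120):
    print(load, "->", desired_replicas(load, 10, 1, 5))
# 0 -> 1
# 8 -> 1
# 35 -> 4
# 120 -> 5
```

Under this model, a sustained load above 50 concurrent requests saturates the five-replica ceiling, which is the point at which raising max_replicas would be worth considering.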

2. Run the service with Ray Serve in the workspace#

Run the following command in the terminal to deploy the service with Ray Serve:

serve run multi_mcp_ray_serve:app

This starts the service on http://localhost:8000.
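The replicas start their containers in the background, so the first requests may arrive before the service responds. A small polling helper can wait for the endpoint to answer. The sketch below uses only the standard library; the names http_probe and wait_until_ready, and the retry settings, are hypothetical choices rather than anything Ray Serve provides.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_probe(url: str, timeout_s: float = 2.0) -> bool:
    """Return True if a GET on `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


def wait_until_ready(
    probe: Callable[[], bool],
    attempts: int = 30,
    delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call `probe` up to `attempts` times, sleeping between failures."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay_s)
    return False


# Example usage against the local service (uncomment to run):
# ready = wait_until_ready(lambda: http_probe("http://localhost:8000/fetch/tools"))
# print("ready" if ready else "not ready")
```

Probing the /fetch/tools route exercises both the router and one MCP replica, which is a stronger signal than checking that the port is open.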

3. Test the service#

import requests
from pprint import pprint

# Configuration.
BASE_URL = "http://localhost:8000"  # Local tooling API base URL


def list_tools(service: str):
    """
    Retrieve the list of available tools for a given service.
    """
    url = f"{BASE_URL}/{service}/tools"
    response = requests.get(url)
    response.raise_for_status()
    return response.json()["tools"]


def call_tool(service: str, tool_name: str, tool_args: dict):
    """
    Invoke a specific tool on a given service with the provided arguments.
    """
    url = f"{BASE_URL}/{service}/call"
    payload = {"tool_name": tool_name, "tool_args": tool_args}
    response = requests.post(url, json=payload)
    response.raise_for_status()
    return response.json()["result"]


# List Brave Search tools.
print("=== Brave Search: Available Tools ===")
brave_tools = list_tools("brave_search")
pprint(brave_tools)

# Run a query via Brave Search.
search_tool = brave_tools[0]["name"]
print(f"\nUsing tool '{search_tool}' to search for best tacos in Los Angeles...")
search_result = call_tool(
    service="brave_search",
    tool_name=search_tool,
    tool_args={"query": "best tacos in Los Angeles"}
)
print("Web Search Results:")
pprint(search_result)

# List Fetch tools.
print("\n=== Fetch Service: Available Tools ===")
fetch_tools = list_tools("fetch")
pprint(fetch_tools)

# Fetch a URL.
fetch_tool = fetch_tools[0]["name"]
print(f"\nUsing tool '{fetch_tool}' to fetch https://example.com...")
fetch_result = call_tool(
    service="fetch",
    tool_name=fetch_tool,
    tool_args={"url": "https://example.com"}
)
print("Fetch Results:")
pprint(fetch_result)
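The test script picks the first tool in each list, which depends on the order the MCP server reports its tools. A slightly more defensive sketch is to select a tool by name and check its required arguments against the input_schema returned by the /tools route. The helper names below are hypothetical, and the sample tool entries are illustrative stand-ins, not captured output from the Brave Search image.

```python
from typing import Any, Dict, List


def find_tool(tools: List[Dict[str, Any]], name: str) -> Dict[str, Any]:
    """Return the tool entry called `name`, or raise LookupError."""
    for tool in tools:
        if tool.get("name") == name:
            return tool
    available = ", ".join(t.get("name", "?") for t in tools)
    raise LookupError(f"Tool {name!r} not found; available: {available}")


def missing_required_args(tool: Dict[str, Any], tool_args: Dict[str, Any]) -> List[str]:
    """List required schema keys that are absent from `tool_args`."""
    required = (tool.get("input_schema") or {}).get("required", [])
    return [key for key in required if key not in tool_args]


# Illustrative entries shaped like the /tools response (assumed, not real output).
sample_tools = [
    {
        "name": "brave_web_search",
        "description": "Example web search tool",
        "input_schema": {"type": "object", "required": ["query"]},
    },
    {"name": "brave_local_search", "description": "Example", "input_schema": {}},
]

tool = find_tool(sample_tools, "brave_web_search")
print(missing_required_args(tool, {}))                  # ['query']
print(missing_required_args(tool, {"query": "tacos"}))  # []
```

Checking arguments on the client side gives a clearer error than the generic 500 response the router returns when a tool call fails.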

4. Production deployment with Anyscale service#

For production deployment, use Anyscale services to deploy the Ray Serve app to a dedicated cluster without modifying the code. Anyscale ensures scalability, fault tolerance, and load balancing, keeping the service resilient against node failures, high traffic, and rolling updates.

Use the following command to deploy the service:

anyscale service deploy multi_mcp_ray_serve:app --name=multi_mcp_tool_service

Note:

This Anyscale Service pulls the associated dependencies, compute config, and service config from the workspace. To define these explicitly, you can deploy from a config.yaml file using the -f flag. See ServiceConfig reference for details.

5. Query the production service#

Deploying exposes the service at a publicly accessible address that you can send requests to.

From the output of the preceding deploy command, copy your BASE_URL and TOKEN (the API key). As an example, the values look like the following:

BASE_URL = "https://multi-mcp-tool-service-jgz99.cld-kvedzwag2qa8i5bj.s.anyscaleuserdata.com"

TOKEN = "z3RIKzZwHDF9sV60o7M48WsOY1Z50dsXDrWRbxHYtPQ"

Replace the placeholder BASE_URL and TOKEN values in the following Python script with your own:

import requests
from pprint import pprint

# Configuration
BASE_URL = "https://multi-mcp-tool-service-jgz99.cld-kvedzwag2qa8i5bj.s.anyscaleuserdata.com"  # Replace with your own URL
TOKEN = "z3RIKzZwHDF9sV60o7M48WsOY1Z50dsXDrWRbxHYtPQ"  # Replace with your own token

HEADERS = {
    "Authorization": f"Bearer {TOKEN}"
}


def list_tools(service: str):
    """
    Retrieve the list of available tools for a given service.
    """
    url = f"{BASE_URL}/{service}/tools"
    response = requests.get(url, headers=HEADERS)
    response.raise_for_status()
    return response.json()["tools"]


def call_tool(service: str, tool_name: str, tool_args: dict):
    """
    Invoke a specific tool on a given service with the provided arguments.
    """
    url = f"{BASE_URL}/{service}/call"
    payload = {"tool_name": tool_name, "tool_args": tool_args}
    response = requests.post(url, json=payload, headers=HEADERS)
    response.raise_for_status()
    return response.json()["result"]


# List Brave Search tools.
print("=== Brave Search: Available Tools ===")
brave_tools = list_tools("brave_search")
pprint(brave_tools)

# Perform a search for "best tacos in Los Angeles".
search_tool = brave_tools[0]["name"]
print(f"\nUsing tool '{search_tool}' to search for best tacos in Los Angeles...")
search_result = call_tool(
    service="brave_search",
    tool_name=search_tool,
    tool_args={"query": "best tacos in Los Angeles"}
)
print("Web Search Results:")
pprint(search_result)

# List Fetch tools.
print("\n=== Fetch Service: Available Tools ===")
fetch_tools = list_tools("fetch")
pprint(fetch_tools)

# Fetch the content of example.com
fetch_tool = fetch_tools[0]["name"]
print(f"\nUsing tool '{fetch_tool}' to fetch https://example.com...")
fetch_result = call_tool(
    service="fetch",
    tool_name=fetch_tool,
    tool_args={"url": "https://example.com"}
)
print("Fetch Results:")
pprint(fetch_result)
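Hard-coding the bearer token in a script makes it easy to leak through version control. One alternative, sketched below, reads the base URL and token from environment variables and builds the Authorization header from them. The variable names MCP_BASE_URL and MCP_TOKEN and the function load_service_config are hypothetical; use whatever naming fits your setup.

```python
import os
from typing import Dict, Mapping, Tuple


def load_service_config(env: Mapping[str, str] = os.environ) -> Tuple[str, Dict[str, str]]:
    """Return (base_url, headers) built from environment variables."""
    missing = [key for key in ("MCP_BASE_URL", "MCP_TOKEN") if not env.get(key)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    # Strip a trailing slash so f"{base_url}/{service}/tools" stays well formed.
    base_url = env["MCP_BASE_URL"].rstrip("/")
    headers = {"Authorization": f"Bearer {env['MCP_TOKEN']}"}
    return base_url, headers


# Demonstration with placeholder values instead of the real environment.
base_url, headers = load_service_config(
    {"MCP_BASE_URL": "https://example.com/", "MCP_TOKEN": "placeholder-token"}
)
print(base_url)   # https://example.com
print(headers)    # {'Authorization': 'Bearer placeholder-token'}
```

The returned values can stand in for the BASE_URL and HEADERS constants in the query script.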